Where Intelligence Meets the Real World

Artificial intelligence becomes truly powerful when it escapes the screen and interacts with the physical world. This fusion, often called Physical AI, combines perception, decision-making, and control to sense, understand, and act on real-world environments. From autonomous vehicles and industrial robots to smart cameras and medical devices, Physical AI systems must respond in real time under strict power, latency, and reliability constraints.

This is where Field-Programmable Gate Arrays (FPGAs) shine.

Compared with CPUs and GPUs, FPGAs offer deterministic timing, fine-grained pipelined parallelism, and hardware-level customization, making them a natural fit for Physical AI workloads at the edge.

What Is Physical AI?

Physical AI refers to AI systems that:

- Perceive the physical world using sensors (vision, LiDAR, radar, IMUs, microphones)

- Reason using machine learning or rule-based logic

- Act through motors, actuators, and control systems

- Close the loop in real time

Key characteristics:

- Hard real-time constraints

- Continuous interaction with the environment

- Tight integration of sensing, compute, and control

- Safety- and reliability-critical behavior

Examples include:

- Autonomous robots and drones

- ADAS and autonomous driving systems

- Industrial automation and predictive maintenance

- Smart medical and surgical devices

Advancing from Edge AI into Physical AI

While Edge AI focuses on processing data locally—enabling low latency, privacy, and resilience—Physical AI extends these capabilities into real-world action through robotics, autonomous systems, and cyber-physical integration. Physical AI systems understand context, learn from physical feedback, and operate reliably under uncertainty.

Why FPGAs for Physical AI?

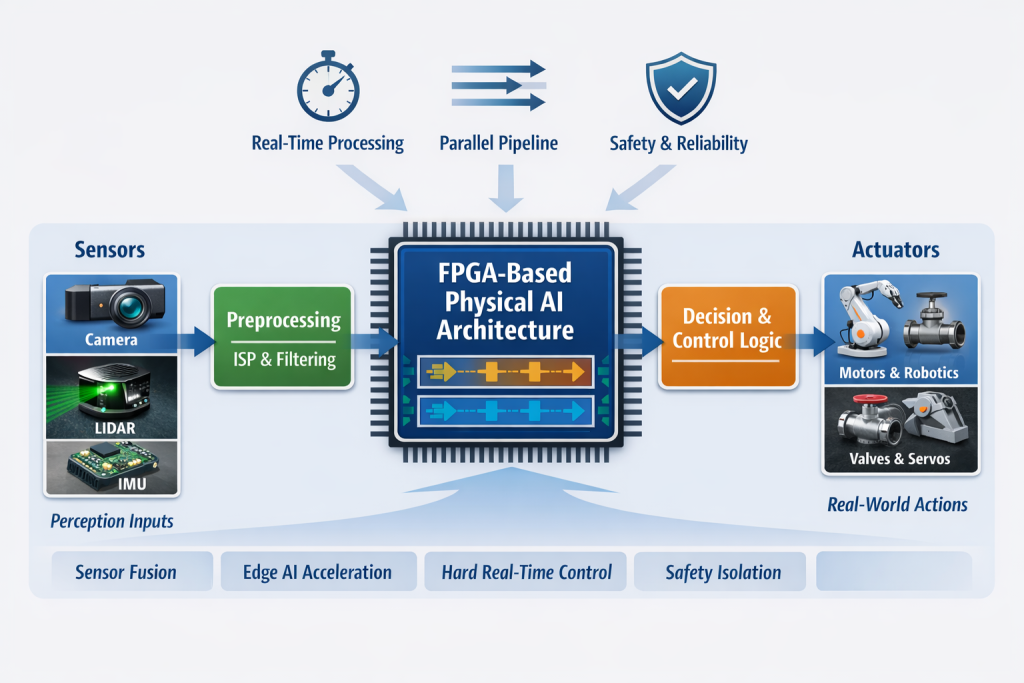

1. Deterministic Real-Time Performance

Physical systems don’t tolerate jitter. A delayed control signal can mean instability or failure.

FPGAs:

- Execute logic with fixed, cycle-accurate timing

- Avoid OS scheduling and cache unpredictability

- Enable hard real-time control loops (µs-level latency)

This is crucial for motor control, sensor fusion, and safety monitoring.
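To illustrate why control logic maps well to this kind of hardware, here is a minimal Python model of a fixed-point PI (proportional-integral) controller update. Every call performs the same fixed sequence of integer multiplies, shifts, adds, and clamps, which is what allows an FPGA implementation to finish in a constant number of clock cycles. The Q16.16 format, the gains, and the `FixedPointPI` class are assumptions chosen for illustration, not a particular vendor IP core.

```python
# Minimal model of a fixed-point PI controller step, assuming Q16.16 values.
# The format, gains, and limits are hypothetical, chosen only for illustration.

FRAC_BITS = 16              # Q16.16: 16 fractional bits
ONE = 1 << FRAC_BITS


def to_fixed(x: float) -> int:
    """Convert a real number to Q16.16, rounding to nearest."""
    return int(round(x * ONE))


def to_float(q: int) -> float:
    """Convert a Q16.16 value back to a real number."""
    return q / ONE


def saturate(value: int, lo: int, hi: int) -> int:
    """Clamp to [lo, hi]; in hardware this is a pair of comparators and a mux."""
    return max(lo, min(hi, value))


class FixedPointPI:
    def __init__(self, kp: float, ki: float, out_min: float, out_max: float):
        self.kp = to_fixed(kp)
        self.ki = to_fixed(ki)
        self.out_min = to_fixed(out_min)
        self.out_max = to_fixed(out_max)
        self.integral = 0   # Q16.16 integrator state

    def step(self, setpoint: int, measurement: int) -> int:
        """One control update: the same integer operations on every call."""
        error = setpoint - measurement
        # Integrate, clamping the integrator to the output range (anti-windup).
        self.integral = saturate(
            self.integral + ((self.ki * error) >> FRAC_BITS),
            self.out_min, self.out_max)
        p_term = (self.kp * error) >> FRAC_BITS
        return saturate(p_term + self.integral, self.out_min, self.out_max)


pi = FixedPointPI(kp=0.5, ki=0.1, out_min=-10.0, out_max=10.0)
u = pi.step(to_fixed(1.0), to_fixed(0.0))
print(to_float(u))   # roughly 0.6 (0.5 proportional + 0.1 integral)
```

Using integers instead of floating point keeps the arithmetic cheap in FPGA fabric and makes rounding behavior identical on every cycle.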

2. Massive Parallelism at Low Latency

Physical AI pipelines often look like this:

Sensor → Preprocessing → AI Inference → Decision → Control Output

On FPGAs:

- All stages run concurrently, each working on a different piece of data

- Tasks need not be serialized as they often are on a CPU

- Data flows through hardware pipelines

This enables:

- Line-rate image and signal processing

- Multi-sensor fusion with minimal buffering

- Sub-millisecond end-to-end latency
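The benefit of pipelining can be estimated with simple cycle arithmetic. The sketch below assumes every stage is fully pipelined and can accept a new item every `ii` cycles (the initiation interval). The first result then appears after the total pipeline depth, and one result emerges per `ii` cycles afterwards. The stage latencies and the 200 MHz clock are hypothetical example numbers, not measurements.

```python
# Back-of-the-envelope comparison of sequential vs. pipelined execution.
# Stage latencies and clock rate are hypothetical example numbers.
from typing import Sequence


def sequential_cycles(stage_latencies: Sequence[int], n_items: int) -> int:
    """Each item passes through every stage before the next item starts."""
    return n_items * sum(stage_latencies)


def pipelined_cycles(stage_latencies: Sequence[int], n_items: int, ii: int = 1) -> int:
    """Stages work concurrently on different items, assuming each stage can
    accept a new item every `ii` cycles."""
    if n_items == 0:
        return 0
    depth = sum(stage_latencies)          # latency of the first item
    return depth + (n_items - 1) * ii     # then one result per `ii` cycles


def cycles_to_us(cycles: int, clock_mhz: float) -> float:
    """Convert a cycle count to microseconds at the given clock frequency."""
    return cycles / clock_mhz


# Hypothetical stages: preprocess, filter, inference, decision, output.
stages = [4, 10, 50, 3, 2]
seq = sequential_cycles(stages, 1000)
pipe = pipelined_cycles(stages, 1000)
print(seq, pipe, cycles_to_us(pipe, 200.0))
```

With these numbers the pipelined design needs 1,068 cycles instead of 69,000, about 5.3 µs at 200 MHz, while the latency of any single item is unchanged.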

3. Efficient Edge AI Inference

Edge Physical AI systems often face:

- Power limits

- Thermal constraints

- No cloud connectivity

FPGAs enable:

- Custom AI accelerators for CNNs, transformers, or classical ML

- Mixed-precision arithmetic (INT8, INT4, fixed-point)

- Tight coupling between AI and control logic

Compared to GPUs, FPGAs can deliver better performance per watt for fixed, well-defined workloads, particularly at the small batch sizes typical of real-time edge inference.
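Mixed-precision inference usually starts with quantization. The following is a minimal sketch of symmetric INT8 quantization applied to one dot product, the core operation of CNN and transformer layers. It assumes a single per-tensor scale and round-to-nearest; real toolchains may use per-channel scales, zero points, and different rounding, so treat this only as an illustration of the idea.

```python
# Sketch of symmetric INT8 quantization for a single dot product.
# Per-tensor scaling is an assumption; production flows may differ.
from typing import List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Map floats to int8 in [-127, 127] using one symmetric scale."""
    max_abs = max(abs(v) for v in values) or 1.0   # avoid a zero scale
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(qa: Sequence[int], qb: Sequence[int]) -> int:
    """Integer multiply-accumulate; in hardware each product can map to a DSP
    block and the running sum to a wide (e.g. 32-bit) accumulator."""
    acc = 0
    for a, b in zip(qa, qb):
        acc += a * b
    return acc


def quantized_dot(a: Sequence[float], b: Sequence[float]) -> float:
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    return int8_dot(qa, qb) * sa * sb   # dequantize once, at the end


a = [0.5, -1.0, 0.25]
b = [1.0, 0.5, -2.0]
print(quantized_dot(a, b))   # close to the exact float result, -0.5
```

Only the final rescaling needs wider arithmetic; the inner loop is pure 8-bit multiplies and integer adds, which is where FPGA fabric is most efficient.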

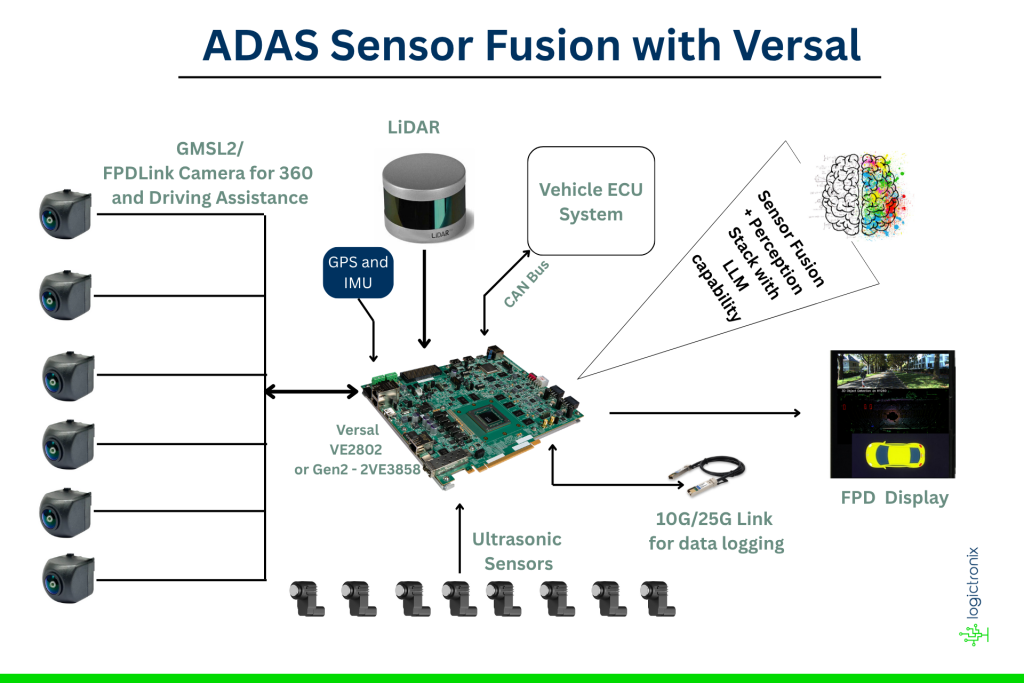

4. Sensor Fusion in Hardware

Physical AI depends on combining data from multiple sensors:

- Camera + LiDAR

- Radar + IMU

- Encoders + force sensors

FPGAs excel at:

- Timestamp alignment

- Hardware synchronization

- Low-latency data fusion

This is especially important in autonomous driving and robotics, where microseconds matter.
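As an example of timestamp alignment, the sketch below estimates an IMU reading at each camera frame time by linearly interpolating between the two nearest IMU samples. It assumes both sensors are timestamped against a shared clock (integer microseconds here) and that IMU timestamps are strictly increasing; the sample values are made up. An FPGA design would typically apply the same logic to hardware-captured timestamps.

```python
# Sketch of timestamp alignment by linear interpolation.
# Assumes a shared time base and strictly increasing IMU timestamps.
from bisect import bisect_left
from typing import List, Sequence


def interpolate_at(t: int, ts: Sequence[int], vals: Sequence[float]) -> float:
    """Interpolate `vals` (sampled at sorted times `ts`) at time `t`,
    clamping to the first/last sample outside the covered interval."""
    if t <= ts[0]:
        return vals[0]
    if t >= ts[-1]:
        return vals[-1]
    i = bisect_left(ts, t)          # now ts[i - 1] < t <= ts[i]
    t0, t1 = ts[i - 1], ts[i]
    w = (t - t0) / (t1 - t0)
    return vals[i - 1] + w * (vals[i] - vals[i - 1])


def align(camera_ts: Sequence[int], imu_ts: Sequence[int],
          imu_vals: Sequence[float]) -> List[float]:
    """Return one interpolated IMU value per camera timestamp."""
    return [interpolate_at(t, imu_ts, imu_vals) for t in camera_ts]


imu_ts = [0, 1000, 2000, 3000]      # 1 kHz IMU, timestamps in µs
imu_vals = [0.0, 1.0, 2.0, 4.0]     # hypothetical angular-rate samples
camera_ts = [500, 2500]             # frame capture times in µs
print(align(camera_ts, imu_ts, imu_vals))
```

In hardware, the binary search is often unnecessary: because samples arrive in time order, a design can simply hold the two most recent IMU samples and interpolate when a frame timestamp arrives.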

5. Safety, Isolation, and Reliability

Many Physical AI systems are safety-critical.

With FPGAs you can:

- Isolate safety logic from AI logic in hardware

- Implement watchdogs and fail-safe paths

- Use lockstep designs and redundancy

- Run safety monitors independent of software

This makes FPGAs attractive for systems targeting ISO 26262 ASIL levels or IEC 61508 compliance.
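Two of the mechanisms listed above can be modeled in a few lines. The sketch below is a cycle-level Python model of a latched watchdog, which forces a fail-safe state if it is not serviced ("kicked") within a timeout, and of a lockstep comparator, which flags a fault when redundant channels disagree. The class and function names and the timeout value are hypothetical; a real design would implement these as independent hardware blocks.

```python
# Cycle-level model of a watchdog and a lockstep comparator.
# Names and timeout values are hypothetical, for illustration only.


class Watchdog:
    def __init__(self, timeout_cycles: int):
        self.timeout = timeout_cycles
        self.counter = 0
        self.tripped = False    # latched: once tripped, stays in fail-safe

    def tick(self, kick: bool) -> bool:
        """Advance one clock cycle; return True if the fail-safe is active."""
        if self.tripped:
            return True
        if kick:
            self.counter = 0
        else:
            self.counter += 1
            if self.counter >= self.timeout:
                self.tripped = True
        return self.tripped


def lockstep_fault(channel_a: int, channel_b: int) -> bool:
    """Redundant copies of the same logic must produce identical outputs."""
    return channel_a != channel_b


wd = Watchdog(timeout_cycles=3)
kicks = [True, False, False, True, False, False, False, True]
history = [wd.tick(k) for k in kicks]
print(history)   # trips on the third consecutive missed kick, then stays latched
```

Latching the trip, rather than letting a late kick clear it, reflects the usual safety practice of requiring an explicit reset after a fault.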

FPGA-Based Physical AI Architecture

A typical FPGA-centric Physical AI system looks like this:

┌─────────────┐
│   Sensors   │  (Camera, LiDAR, IMU)
└──────┬──────┘
       │
┌──────▼──────┐
│ Preprocess  │  (ISP, filtering, FFT)
│    (HW)     │
└──────┬──────┘
       │
┌──────▼──────┐
│ AI Inference│  (DNN accelerator)
│    (HW)     │
└──────┬──────┘
       │
┌──────▼──────┐
│ Decision &  │  (FSM / RT logic)
│   Control   │
└──────┬──────┘
       │
┌──────▼──────┐
│  Actuators  │  (Motors, valves)
└─────────────┘
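The Decision & Control stage in this architecture is often a small finite-state machine (FSM) updated once per control cycle. Below is a minimal Python sketch of such an FSM. The states, the distance thresholds, the assumption that the inference stage reports an obstacle distance, and the `fault` input (which could come from a safety monitor) are all hypothetical.

```python
# Sketch of a decision FSM for the "Decision & Control" block.
# States, thresholds, and inputs are hypothetical.
from enum import Enum


class State(Enum):
    CRUISE = 0
    SLOW = 1
    STOP = 2


SLOW_DIST_M = 5.0   # hypothetical: slow down when an obstacle is closer
STOP_DIST_M = 1.0   # hypothetical: stop when an obstacle is closer


def next_state(state: State, obstacle_dist_m: float, fault: bool) -> State:
    """Next state from current state and inputs, like a registered FSM."""
    if fault or obstacle_dist_m < STOP_DIST_M:
        return State.STOP
    if state is State.STOP and obstacle_dist_m < SLOW_DIST_M:
        return State.STOP   # hysteresis: stay stopped until the path is clear
    if obstacle_dist_m < SLOW_DIST_M:
        return State.SLOW
    return State.CRUISE


# Hypothetical speed command (fraction of max) issued in each state.
SPEED_CMD = {State.CRUISE: 1.0, State.SLOW: 0.3, State.STOP: 0.0}

state = State.CRUISE
trace = []
for dist in [10.0, 4.0, 0.5, 3.0, 6.0]:
    state = next_state(state, dist, fault=False)
    trace.append(state)
print([s.name for s in trace], SPEED_CMD[state])
```

The hysteresis rule prevents the system from oscillating between STOP and SLOW when an obstacle hovers near the stop threshold.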

Optionally:

- CPUs (ARM, MicroBlaze, RISC-V) manage configuration and high-level planning

- RTOS or bare-metal firmware handles orchestration

- Linux may run alongside an RTOS in an asymmetric multiprocessing (AMP) configuration, for example using the OpenAMP framework

Use Cases

Autonomous Vehicles and ADAS

- Real-time sensor fusion

- Low-latency perception

- Redundant safety paths

Robotics

- Motor control + AI inference on one chip

- Vision-based grasping

- Collision avoidance

Industrial Automation

- Predictive maintenance

- Machine vision inspection

- Deterministic fieldbus integration

Smart Edge Devices

- Intelligent cameras

- Traffic monitoring

- Medical imaging systems

FPGA + AI Frameworks

Modern FPGA Physical AI development is supported by:

- RTL-based accelerators written in Verilog or VHDL

- High-Level Synthesis (HLS)

- AI toolchains (e.g., AMD Vitis AI, or custom AI/ML acceleration flows)

- Zephyr / FreeRTOS for real-time control

- Linux for orchestration and networking

This allows teams to combine software flexibility with hardware determinism.

The Future of Physical AI on FPGAs

As AI moves from cloud to edge, Physical AI will demand:

- Lower latency

- Higher safety

- Better power efficiency

- Tighter sensor–compute integration

FPGAs uniquely sit at this intersection.

With the rise of heterogeneous SoCs (CPU + FPGA + AI engines), FPGAs are no longer just accelerators—they are becoming the central nervous system of Physical AI machines.

Conclusion

Physical AI is not just about smarter algorithms—it’s about real-time interaction with reality. FPGAs provide the determinism, parallelism, and efficiency required to make AI truly physical.

In a world where milliseconds matter and failures are unacceptable, FPGA-based Physical AI systems are not just an option—they are often the right architecture.